Diagnostic Errors in Hospitalized Patients

From Brigham and Women’s Hospital and Harvard Medical School, Boston, MA.

Strategies to Improve Measurement of Diagnostic Errors

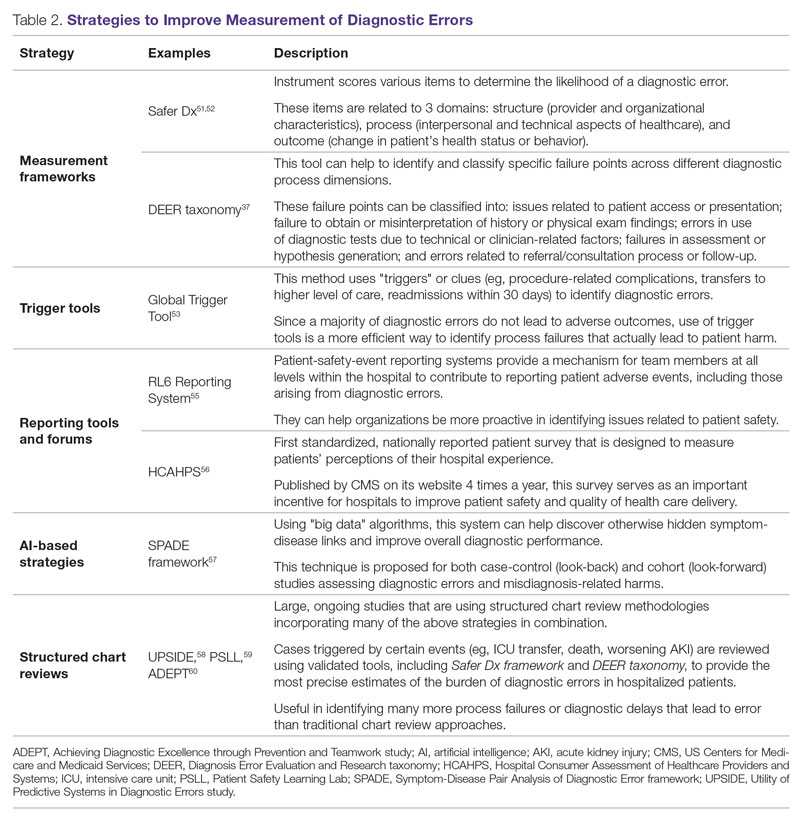

Developing new methods to reliably measure diagnostic errors is an area of active research. Uniform, universally agreed-upon frameworks for defining and identifying process failure points and diagnostic errors would help reduce measurement error and would support the development and testing of interventions that generalize across health care settings. Several novel approaches have been proposed to define and measure diagnostic errors more accurately (Table 2).

The Safer Dx framework is a comprehensive tool developed to advance the measurement of diagnostic errors. For an episode of care under review, the instrument scores a series of items to estimate the likelihood that a diagnostic error occurred. These items assess the factors that affect diagnostic performance across 3 broad domains: structure (provider and organizational characteristics, ranging from everyone involved in patient care to computing infrastructure, policies, and regulations), process (elements of the patient-provider encounter, diagnostic test performance and follow-up, and subspecialty- and referral-specific factors), and outcome (establishing an accurate and timely diagnosis, as opposed to a missed, delayed, or incorrect one). The instrument has been revised and can be further adapted by a variety of stakeholders, including clinicians, health care organizations, and policymakers, to identify potential diagnostic errors in a standardized way for patient safety and quality improvement research.51,52

Standardized tools, such as the Diagnosis Error Evaluation and Research (DEER) taxonomy, can help identify and classify specific failure points across different dimensions of the diagnostic process.37 These failure points can be classified into the following categories: issues related to patient presentation or access to health care; failure to obtain, or misinterpretation of, history or physical exam findings; errors in the use of diagnostic tests due to technical or clinician-related factors; failure to weigh evidence appropriately and generate hypotheses; errors associated with the referral or consultation process; and failure to monitor the patient or obtain timely follow-up.34 The DEER taxonomy can also be modified to suit specific research questions and study populations. Further, its categories can be mapped onto the diagnostic process dimensions of the Safer Dx framework, which provides insight into the reasons for specific process failures and supports the development of new interventions to mitigate errors and patient harm.6
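To make the classification step concrete, the following minimal sketch represents the failure-point categories listed above as a Python enum and tallies how often each one is identified across reviewed cases. It is an illustration under stated assumptions, not an implementation of DEER: the enum names (`FailurePoint`, `tally_failure_points`) are hypothetical, the category labels paraphrase this text rather than the official DEER item list, and the case data are invented.

```python
from collections import Counter
from enum import Enum


class FailurePoint(Enum):
    """Failure-point groups paraphrased from the text (hypothetical, not the official DEER item list)."""
    ACCESS_PRESENTATION = "patient presentation or access to care"
    HISTORY_EXAM = "history or physical exam"
    TESTING = "use of diagnostic tests"
    ASSESSMENT = "weighing of evidence and hypothesis generation"
    REFERRAL = "referral or consultation"
    FOLLOW_UP = "patient monitoring and follow-up"


def tally_failure_points(reviews):
    """Count the cases in which each failure point was identified.

    A case can have several failure points, so the counts can sum to more
    than the number of cases.
    """
    counts = Counter()
    for case_failures in reviews:
        # set() ensures a failure point is counted at most once per case
        counts.update(set(case_failures))
    return counts


# Hypothetical reviewer findings for three cases
reviews = [
    [FailurePoint.HISTORY_EXAM, FailurePoint.ASSESSMENT],
    [FailurePoint.ASSESSMENT],
    [FailurePoint.TESTING, FailurePoint.FOLLOW_UP, FailurePoint.ASSESSMENT],
]
counts = tally_failure_points(reviews)
for point in FailurePoint:
    # Counter returns 0 for categories that never appeared
    print(f"{point.value}: {counts[point]}")
```

Keeping the categories in one enum makes it straightforward to adapt them to a particular study, as the text describes, without changing the tallying logic.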

Because most diagnostic errors do not cause actual harm, using “triggers” or clues (eg, procedure-related complications, patient falls, transfers to a higher level of care, readmissions within 30 days) can be a more efficient way to identify the diagnostic errors and adverse events that do cause harm. The Global Trigger Tool, developed by the Institute for Healthcare Improvement, uses this strategy and has been shown to identify significantly more serious adverse events than comparable methods.53 Focusing on harmful events in this way helps institutions select and develop the strategies most likely to improve patient outcomes.24
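The screening idea behind trigger-based review can be sketched as a simple filter: each admission is checked against a set of triggers, and only flagged admissions move on to chart review. This is a minimal Python sketch, assuming a hypothetical `Admission` record with invented field names; it uses only the four example triggers named above, whereas the Global Trigger Tool's actual trigger list and review procedure are more extensive.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Admission:
    """Hypothetical admission record; field names are illustrative only."""
    admission_id: str
    procedure_complication: bool = False
    fall: bool = False
    transfer_to_higher_care: bool = False
    days_to_readmission: Optional[int] = None  # None if the patient was not readmitted


def triggers_for(adm: Admission, readmit_window_days: int = 30) -> list:
    """Return the names of the triggers that fire for one admission."""
    fired = []
    if adm.procedure_complication:
        fired.append("procedure complication")
    if adm.fall:
        fired.append("patient fall")
    if adm.transfer_to_higher_care:
        fired.append("transfer to higher level of care")
    if adm.days_to_readmission is not None and adm.days_to_readmission <= readmit_window_days:
        fired.append(f"readmission within {readmit_window_days} days")
    return fired


def select_for_review(admissions: list) -> dict:
    """Keep only admissions with at least one trigger; only these go on to chart review."""
    selected = {}
    for adm in admissions:
        fired = triggers_for(adm)
        if fired:
            selected[adm.admission_id] = fired
    return selected


admissions = [
    Admission("A1"),
    Admission("A2", fall=True),
    Admission("A3", days_to_readmission=12),
    Admission("A4", days_to_readmission=45),
    Admission("A5", procedure_complication=True, transfer_to_higher_care=True),
]
print(select_for_review(admissions))
# {'A2': ['patient fall'], 'A3': ['readmission within 30 days'], 'A5': ['procedure complication', 'transfer to higher level of care']}
```

Only A2, A3, and A5 would be passed to reviewers. The screen narrows the review workload, but like any trigger list it can miss errors that produce none of the chosen signals.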

Encouraging and facilitating voluntary or prompted reporting by patients and clinicians can also play an important role in capturing diagnostic errors. Patients and clinicians are not only key stakeholders but are also uniquely placed within the diagnostic process to detect and report potential errors.25,54 Patient safety event reporting systems, such as RL6, are vital for reporting near misses and adverse events. These systems allow team members at all levels of the hospital to report patient adverse events, including those arising from diagnostic errors.55 The Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey is the first standardized, nationally reported survey of patients’ perceptions of their hospital experience. The US Centers for Medicare and Medicaid Services (CMS) publishes HCAHPS results on its website 4 times a year; this public reporting gives hospitals an important incentive to improve patient safety and the quality of health care delivery.56

Another novel approach, the Symptom-Disease Pair Analysis of Diagnostic Error (SPADE) framework, links symptoms to a range of target diseases. Using “big data” technologies, this technique can help uncover otherwise hidden symptom-disease links and improve overall diagnostic performance. The approach has been proposed for both case-control (look-back) and cohort (look-forward) studies assessing diagnostic errors and misdiagnosis-related harms. For example, the look-back approach starts with a known diagnosis with high potential for harm (eg, stroke) and identifies the high-risk symptoms that preceded it (eg, dizziness, vertigo). The look-forward approach starts with a single symptom or exposure risk factor that is frequently misdiagnosed (eg, dizziness) and identifies the adverse disease outcomes that follow it (eg, stroke, migraine).57
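The two study directions can be sketched as simple counting passes over time-stamped encounter data. This minimal Python illustration is an assumption-laden sketch, not SPADE as published: the `Event` record, its fields, the 30-day window, and the patient data are all hypothetical, and no big-data tooling or statistical testing is involved.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Hypothetical encounter record: a symptom visit or a disease diagnosis."""
    patient_id: str
    day: int    # days since an arbitrary study start
    kind: str   # "symptom" or "diagnosis"
    label: str  # e.g. "dizziness" or "stroke"


def look_back(events, target_dx, window_days=30):
    """Case-control direction: count symptom visits in the window before each target diagnosis."""
    counts = Counter()
    for dx in events:
        if dx.kind != "diagnosis" or dx.label != target_dx:
            continue
        for ev in events:
            if (ev.patient_id == dx.patient_id and ev.kind == "symptom"
                    and dx.day - window_days <= ev.day < dx.day):
                counts[ev.label] += 1
    return counts


def look_forward(events, index_symptom, window_days=30):
    """Cohort direction: count diagnoses in the window after each index-symptom visit."""
    counts = Counter()
    for sx in events:
        if sx.kind != "symptom" or sx.label != index_symptom:
            continue
        for ev in events:
            if (ev.patient_id == sx.patient_id and ev.kind == "diagnosis"
                    and sx.day < ev.day <= sx.day + window_days):
                counts[ev.label] += 1
    return counts


events = [
    Event("p1", 0, "symptom", "dizziness"),
    Event("p1", 3, "diagnosis", "stroke"),
    Event("p2", 10, "symptom", "vertigo"),
    Event("p2", 50, "diagnosis", "stroke"),  # 40 days later: outside the window
    Event("p3", 5, "symptom", "dizziness"),
    Event("p3", 20, "diagnosis", "migraine"),
    Event("p4", 7, "symptom", "dizziness"),  # no later diagnosis
    Event("p5", 100, "symptom", "headache"),
    Event("p5", 102, "diagnosis", "stroke"),
]
print(dict(look_back(events, "stroke")))        # {'dizziness': 1, 'headache': 1}
print(dict(look_forward(events, "dizziness")))  # {'stroke': 1, 'migraine': 1}
```

Because p2's stroke fell outside the assumed 30-day window, vertigo does not appear in the look-back counts; the window length is a study-design choice that directly changes the results. A full analysis would also need to compare such counts against a control or expected baseline, which this sketch omits.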

Many large ongoing studies of diagnostic errors among hospitalized patients, such as Utility of Predictive Systems to identify Inpatient Diagnostic Errors (UPSIDE),58 Patient Safety Learning Lab (PSLL),59 and Achieving Diagnostic Excellence through Prevention and Teamwork (ADEPT),60 use structured chart review methods that combine many of the strategies above. Cases flagged by certain trigger events (eg, ICU transfer, death, rapid response event, new or worsening acute kidney injury) are reviewed with validated tools, including the Safer Dx framework and DEER taxonomy, to produce more precise estimates of the burden of diagnostic errors in hospitalized patients. These estimates may be much higher than those previously obtained with traditional chart review approaches.6,24 For example, a recently published study of 2809 random admissions in 11 Massachusetts hospitals identified 978 adverse events but only 10 diagnostic errors (diagnostic error rate, 0.4%).19 This low count likely reflects that the trigger method used in that study did not examine the diagnostic process as critically as the Safer Dx framework and DEER taxonomy do, and therefore underestimated the total number of diagnostic errors. Further, these ongoing studies (eg, UPSIDE, ADEPT) aim to apply emerging machine-learning methods to build models that can improve overall diagnostic performance. This would pave the way to build and test novel, efficient, and scalable interventions to reduce diagnostic errors and improve patient outcomes.
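The 0.4% rate reported for the Massachusetts study follows directly from its counts. The second ratio, computed here from the same counts for illustration only, shows that diagnostic errors made up roughly 1% of the adverse events that study detected.

```latex
\begin{aligned}
\text{diagnostic error rate} &= \frac{10 \ \text{diagnostic errors}}{2809 \ \text{admissions}} \approx 0.0036 \approx 0.4\% \\[4pt]
\text{diagnostic errors among adverse events} &= \frac{10}{978} \approx 0.010 \approx 1\%
\end{aligned}
```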