Artificial Intelligence vs Medical Providers in the Dermoscopic Diagnosis of Melanoma

Early diagnosis of melanoma drastically reduces morbidity and mortality; however, most skin lesions are not initially evaluated by dermatologists, and some patients may require a referral. This study sought to determine the performance of an artificial intelligence (AI) application in classifying lesions as benign or malignant to determine whether AI could assist in screening potential melanoma cases. One hundred dermoscopic images (80 benign nevi and 20 biopsy-verified malignant melanomas) were assessed by an AI application as well as 23 dermatologists, 7 family physicians, and 12 primary care mid-level providers. The AI’s high accuracy and positive predictive value (PPV) demonstrate that this AI application could be a reliable melanoma screening tool for providers.

Practice Points

- Artificial intelligence (AI) has the potential to facilitate the diagnosis of pigmented lesions and expedite the management of malignant melanoma.

- Further studies should determine whether the high diagnostic accuracy of the AI application we studied translates into fewer unnecessary biopsies or expedited referral for pigmented lesions.

- The large variability in formal dermoscopy training among board-certified dermatologists may contribute to their lower accuracy, relative to AI, in identifying pigmented lesions on dermoscopic images.

Comment

Automatic Visual Recognition Development—The AI application we studied was developed by dermatologists as a tool to assist in screening skin lesions and distinguishing those suspicious for melanoma from benign neoplasms.8 Developing AI applications that can reliably recognize objects in photographs has been the subject of considerable research. Notable progress in automatic visual recognition came in 2012, when a deep learning model won the ImageNet object recognition challenge, outperforming competing approaches by a large margin.9,10 The ImageNet competition, held annually beginning in 2010, required participants to build a visual classification system that distinguished among 1000 object categories using 1.2 million labeled images as training data. By 2017, participants had developed automated visual systems that surpassed the estimated human performance.11 Given this success, the organizers set out to deliver a more challenging competition involving 3D imaging: Medical ImageNet, a petabyte-scale, cloud-based, open repository project with goals that include image classification and annotation.12

Convolutional Neural Networks—Convolutional neural networks (CNNs) are computer system architectures commonly used to make predictions from images.13 They are built from a series of layers of learned filters that perform convolution, a mathematical operation that combines 2 functions (here, the input image and a filter) to produce a third that shows how strongly the filter's pattern is present at each location in the image. The main algorithm that makes learning possible is backpropagation, wherein an error is computed at the output and propagated backward through the network's layers so that each filter's weights can be adjusted to reduce that error.14 Although CNNs trained with backpropagation have existed since 1989, only recent technologic advances have allowed deep learning–based algorithms to be widely integrated into everyday applications.15 Advances in computational power in the form of graphics processing units and parallelization, the availability of large data sets such as the ImageNet database, and the rise of software frameworks have allowed for quick prototyping and deployment of deep learning models.16,17
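The convolution and backpropagation steps described above can be illustrated with a minimal NumPy sketch. It is not the studied application's code: a single 3×3 filter, a squared-error loss, a toy 6×6 input, and a hypothetical vertical-edge target filter are all assumptions chosen for brevity. As in most CNN libraries, the "convolution" here is technically cross-correlation (the filter is not flipped).

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with no padding ("valid" mode).

    Like most CNN libraries, this computes cross-correlation (the kernel is
    not flipped), which deep learning texts conventionally call convolution.
    """
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def loss_and_kernel_grad(image, kernel, target):
    """Forward pass, squared-error loss, and the backpropagated gradient."""
    out = conv2d_valid(image, kernel)
    err = out - target                  # error computed at the output
    loss = 0.5 * np.sum(err ** 2)
    # Backward pass: dLoss/dkernel[a, b] = sum over (i, j) of
    # err[i, j] * image[i + a, j + b], i.e. the error map slid over the input.
    grad = conv2d_valid(image, err)
    return loss, grad


# Toy example: learn a hypothetical 3x3 vertical-edge filter from data.
rng = np.random.default_rng(0)
image = rng.normal(size=(6, 6))
true_kernel = np.array([[1.0, 0.0, -1.0]] * 3)
target = conv2d_valid(image, true_kernel)

kernel = np.zeros((3, 3))               # untrained filter
losses = []
for step in range(200):
    loss, grad = loss_and_kernel_grad(image, kernel, target)
    losses.append(loss)
    kernel -= 0.01 * grad               # plain gradient-descent update
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.6f}")
```

Each iteration computes the output error and pushes it back to the filter weights; in a real CNN the same gradient would continue backward through every earlier layer.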

Convolutional neural networks have demonstrated the potential to excel at a wide range of visual tasks. In dermatology, visual recognition methods often rely either on using a pretrained CNN as a feature extractor for a separate classifier or on fine-tuning a pretrained network on dermoscopic images.18-20 In 2017, a model trained on approximately 130,000 clinical images of benign and malignant skin lesions performed on par with 21 US board-certified dermatologists when diagnosing biopsy-confirmed skin cancers from clinical images.21
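The difference between the 2 transfer-learning strategies can be shown in a toy NumPy sketch. Here a single ReLU layer with random weights is a hypothetical stand-in for a pretrained CNN, and a logistic-regression head is the added classifier; the data, layer sizes, and learning rate are illustrative assumptions. The only change between the strategies is whether the error is backpropagated into the "pretrained" weights.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train(x, y, w1, w2, fine_tune, lr=0.1, epochs=100):
    """Train a logistic classification head on ReLU features h = max(0, x @ w1).

    fine_tune=False: w1 stays frozen (pretrained network as a feature extractor).
    fine_tune=True:  w1 is updated by backpropagation as well (fine-tuning).
    """
    w1, w2 = w1.copy(), w2.copy()
    n = len(y)
    for _ in range(epochs):
        h = np.maximum(0.0, x @ w1)        # features from the "pretrained" layer
        p = sigmoid(h @ w2)                # predicted probability of class 1
        d_logit = (p - y) / n              # gradient of mean binary cross-entropy
        grad_w2 = h.T @ d_logit
        if fine_tune:
            # Backpropagate through the head and the ReLU into the extractor.
            d_h = np.outer(d_logit, w2) * (h > 0)
            w1 -= lr * (x.T @ d_h)
        w2 -= lr * grad_w2
    return w1, w2


# Toy data; the random w1 is a hypothetical stand-in for pretrained weights.
rng = np.random.default_rng(0)
x = rng.normal(size=(40, 8))
y = (x[:, 0] > 0).astype(float)
w1_pretrained = rng.normal(size=(8, 16))
w2_init = np.zeros(16)

w1_frozen, w2_frozen = train(x, y, w1_pretrained, w2_init, fine_tune=False)
w1_tuned, w2_tuned = train(x, y, w1_pretrained, w2_init, fine_tune=True)
```

With `fine_tune=False` the extractor weights come back unchanged and only the head adapts; with `fine_tune=True` they shift toward the new task. Freezing is typically cheaper and less prone to overfitting when labeled dermoscopic images are scarce.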

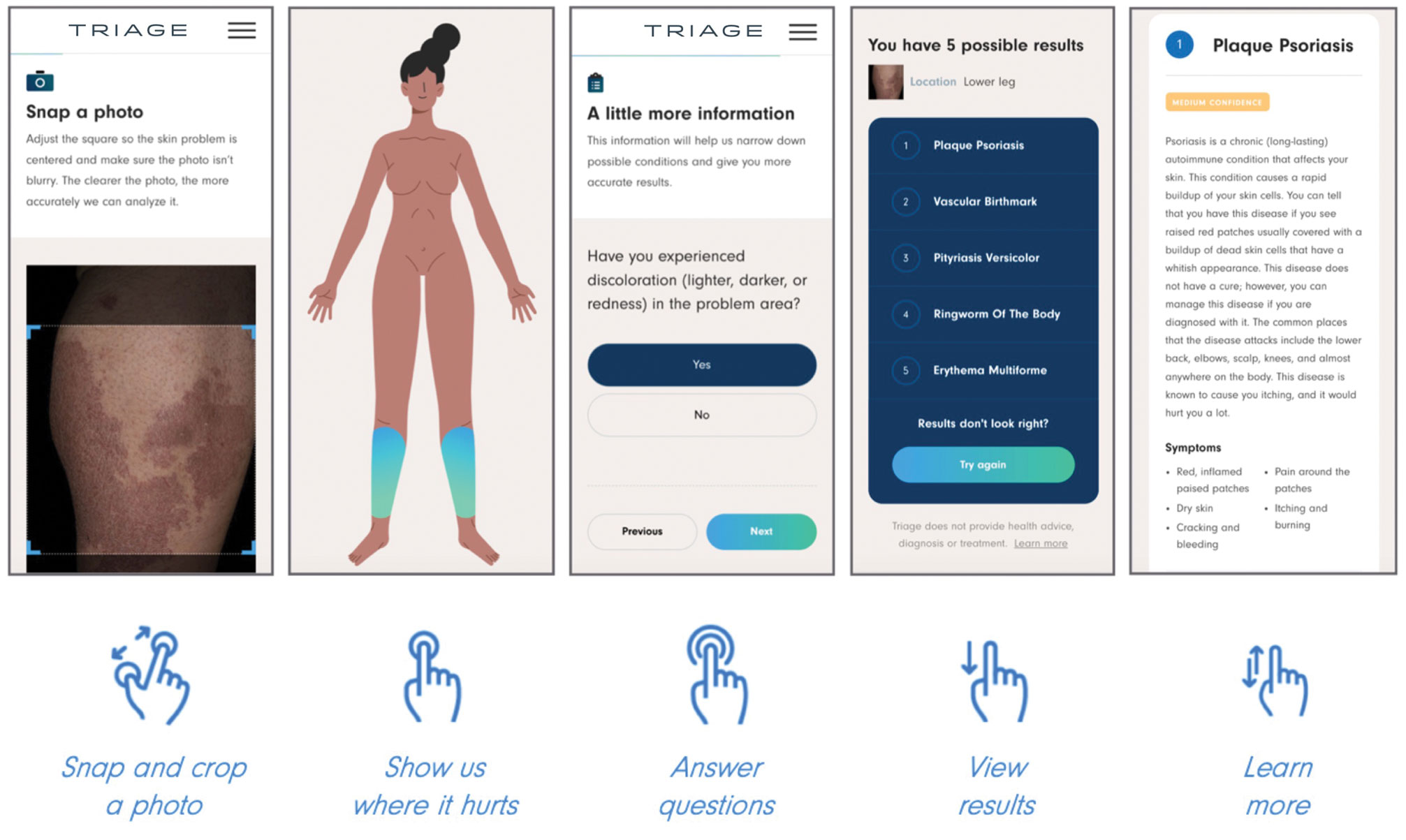

Triage—The AI application Triage consists of several components accessed through a web interface (Figure 2). To use it, the user signs up and uploads a photograph to the website. The image first passes through a gated-logic visual classifier that rejects any image that does not contain a visible skin condition. If the image does contain a skin condition, it is passed to a skin classifier that predicts, for each of 133 classes of skin conditions, the probability that the image shows that condition; 7 of these classes can be diagnosed by the application from a dermoscopic image.
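The two-stage flow described above (a gate, then a multiclass classifier) can be sketched as follows. The models are hypothetical stubs, and the 0.5 gate threshold and the use of a softmax to turn classifier scores into probabilities are assumptions; only the 133-class count comes from the text.

```python
import numpy as np

NUM_CLASSES = 133  # skin-condition classes scored by the skin classifier


def softmax(logits):
    z = logits - np.max(logits)         # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def triage(image, gate_model, skin_model, gate_threshold=0.5):
    """Return None if the gate rejects the image, else 133 class probabilities.

    gate_model(image)  -> probability that a visible skin condition is present
    skin_model(image)  -> array of NUM_CLASSES unnormalized scores (logits)
    """
    if gate_model(image) < gate_threshold:
        return None                     # no visible skin condition: reject
    return softmax(skin_model(image))


# Hypothetical stand-in models, for illustration only.
def toy_gate(image):
    return 0.9 if image.mean() > 0 else 0.1


def toy_skin(image):
    return np.linspace(0.0, 1.0, NUM_CLASSES) * image.mean()


probs = triage(np.ones((8, 8)), toy_gate, toy_skin)
rejected = triage(-np.ones((8, 8)), toy_gate, toy_skin)
```

Gating first means the more expensive skin classifier never scores photographs that lack a skin condition.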

The AI application uses several techniques when training its CNN model. To address class imbalance in the training data (when 1 class of skin condition has many more examples than the others), additional weight is applied to mistakes made on underrepresented classes, which encourages the model to better detect conditions with low prevalence in the data set. Data augmentation techniques such as rotating, zooming, and flipping the training images expose the model to the variability it will encounter in input images. The CNNs are trained using a well-known neural network optimization method, stochastic gradient descent with momentum.22
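One common way to implement each of the 3 training techniques is sketched below in NumPy. The specific choices are assumptions, not the application's published settings: inverse-frequency class weights, 90-degree rotations and horizontal flips (zooming omitted), and momentum 0.9 with learning rate 0.01.

```python
import numpy as np


def inverse_frequency_weights(labels, num_classes):
    """Weight each class by n_samples / (num_classes * class_count)."""
    counts = np.bincount(labels, minlength=num_classes).astype(float)
    counts[counts == 0] = 1.0           # guard against classes absent from the data
    return len(labels) / (num_classes * counts)


def weighted_cross_entropy(probs, labels, class_weights):
    """Cross-entropy where each example counts in proportion to its class weight."""
    picked = probs[np.arange(len(labels)), labels]   # probability of the true class
    w = class_weights[labels]
    return float(np.sum(w * -np.log(picked + 1e-12)) / np.sum(w))


def augment(image, rng):
    """Random 90-degree rotation plus an optional horizontal flip."""
    image = np.rot90(image, k=int(rng.integers(4)))
    if rng.random() < 0.5:
        image = np.fliplr(image)
    return image


def sgd_momentum_step(param, grad, velocity, lr=0.01, momentum=0.9):
    """Heavy-ball update: v <- momentum * v - lr * grad; param <- param + v."""
    velocity = momentum * velocity - lr * grad
    return param + velocity, velocity


# Minimize f(x) = x**2 (gradient 2x) with momentum SGD.
x, v = 5.0, 0.0
for _ in range(200):
    x, v = sgd_momentum_step(x, 2.0 * x, v)
```

With labels `[0, 0, 0, 1]`, the rare class receives 3 times the weight of the common one, so a mistake on it raises the loss 3 times as much.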

The final predictions are refined by a question-and-answer system that encodes dermatology knowledge and is under active development. Finally, the top k most probable conditions are displayed to the user, where k≤5. An initial prototype of the system was described in a paper presented at the 2019 medical imaging workshop of the Neural Information Processing Systems (NeurIPS) conference.23
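Selecting the conditions to display, with k capped at 5 as stated above, amounts to sorting the refined probabilities. In this sketch the condition names and probability values are illustrative placeholders, not the application's class list or output.

```python
import numpy as np

MAX_K = 5  # at most 5 conditions are shown to the user


def top_k_conditions(probs, class_names, k=MAX_K):
    """Return up to k (name, probability) pairs, most probable first, with k capped at 5."""
    k = min(k, MAX_K, len(probs))
    order = np.argsort(probs)[::-1][:k]     # indices by descending probability
    return [(class_names[i], float(probs[i])) for i in order]


# Illustrative class names and refined probabilities.
names = ["nevus", "melanoma", "seborrheic keratosis", "dermatofibroma",
         "basal cell carcinoma", "actinic keratosis", "vascular lesion"]
probs = np.array([0.40, 0.25, 0.15, 0.08, 0.06, 0.04, 0.02])
shown = top_k_conditions(probs, names, k=10)
```

Even when a caller requests 10 conditions, only the 5 most probable are returned.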

The prototype demonstrated that combining a pretrained CNN with a reinforcement learning agent acting as a question-answering model increased the classification confidence and accuracy of its visual symptom checker while decreasing the average number of questions needed to narrow down the differential diagnosis. The reinforcement learning approach increased accuracy by more than 20% compared with a CNN-only approach, which uses only visual information to predict the condition.23